How to Secure Your Cloud-Hosted App Infrastructure

To properly secure cloud-hosted apps and data, orgs need a strong understanding of the tools and technologies available, plus the skills needed to use them.

Christina Harker, PhD

Marketing

Adopting cloud computing as the primary environment for software infrastructure means fundamentally shifting how an organization views and approaches security. Infrastructure security must be comprehensive, covering both public-facing and internally facing assets, with a strong focus on zero-trust architecture. Legacy methods of securing compute resources no longer work in a borderless network topology. Additionally, the tools and skill sets needed to effectively manage and secure cloud resources are vastly different. Any organization making the move has one question left to answer: is it ready?

How is Cloud Infrastructure Security Different?

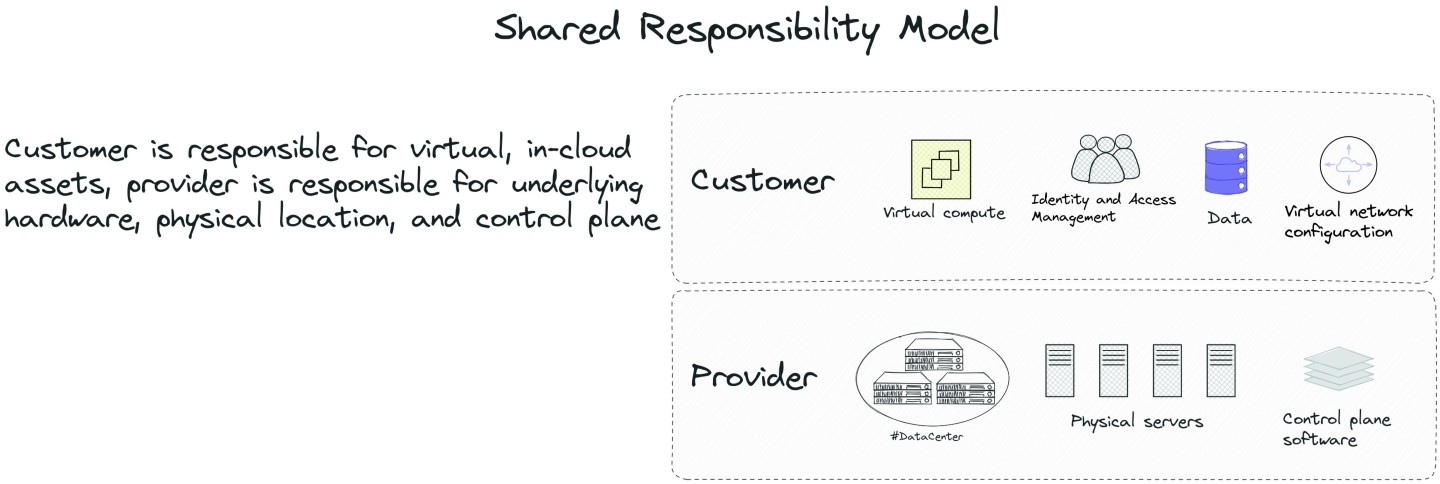

Understanding infrastructure security in the cloud starts with understanding security in the cloud, and how it differs from traditional on-premise environments. Building this understanding starts with a fundamental premise: the shared responsibility model.

In a hypothetical on-premise model, a company owns (or leases) a physical space and owns and manages all of the computing resources within. The network, servers, storage devices, and workstations are all under the purview of the company's operations staff, software engineers, and information security teams. They are completely responsible for all facets of securing their digital infrastructure.

In contrast, the shared responsibility model is a framework with an explicit separation of security ownership between a customer and a cloud services provider. Although different providers have different models and language around this, the general idea is that providers are responsible for securing the underlying hardware, physical locations, and administrative software interfaces, while customers are responsible for securing the applications, data, and compute resources that run on top of them. An important point to consider is that lax security doesn't just present a risk to a specific organization's data and assets. Because most cloud resources are shared among tenants, providers are often obligated to suspend or restrict entire accounts if they represent a security risk to other customers (tenants) or behave as "noisy neighbors" by exceeding resource or bandwidth quotas.

Cloud resources also occupy a very different network topology from what is present in on-premise environments. There is, at best, a fuzzy network boundary, and newly provisioned resources are very likely to be publicly accessible from the internet by default. In addition, a cloud environment is dynamic: modern software development involves fast feature iteration, and infrastructure is constantly created and destroyed in response to elastic user demand. Legacy security tooling and processes were conceived to address a very different threat landscape.

Effective Security When Everything is Code

In more traditional on-premise environments, "code" belonged solely to the realm of application development; infrastructure provisioning, configuration, and security best practices were largely outside its scope. Infrastructure itself was managed through a series of highly manual processes, often involving server images or physical hardware that had to be configured with separate sets of changes and updates. In the cloud, that changed completely.

In any of the major cloud platforms, nearly everything can be defined as code: infrastructure, policy configurations, identity and access management (IAM), monitoring tools and configuration, pager rotations, and more. While most initial forays into provisioning cloud resources involve a GUI or console interface, at any kind of meaningful scale managing everything with code is an absolute necessity. Code enables leverage: use an Infrastructure-as-Code (IaC) tool to define an application stack once, and the module can be reused over and over as needed.

There are several choices available for any organization that wants to adopt an "everything is code" approach. Popular IaC tools include Terraform, AWS CDK, CloudFormation, and Pulumi. Terraform is a solid choice as it supports the major cloud providers, as well as several PaaS/SaaS vendors that provide supporting services like monitoring, paging, and analytics. Its declarative syntax means that users define what infrastructure they want, and the tool determines the steps needed to correctly provision the individual resources.
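To make the declarative idea concrete, here is a minimal sketch in Python. It is not how Terraform is implemented (Terraform also tracks state and resource dependencies); it only illustrates the general pattern of comparing a desired definition against what currently exists and deriving the actions needed. The resource names and attributes are hypothetical.

```python
from typing import Dict, List, Tuple

# A resource is just a dictionary of attributes in this toy model.
Resource = Dict[str, object]


def plan_changes(desired: Dict[str, Resource],
                 actual: Dict[str, Resource]) -> List[Tuple[str, str]]:
    """Compare desired state with actual state and return (action, name) pairs."""
    changes = []
    for name, spec in sorted(desired.items()):
        if name not in actual:
            changes.append(("create", name))
        elif actual[name] != spec:
            changes.append(("update", name))
    for name in sorted(actual):
        if name not in desired:
            changes.append(("delete", name))
    return changes


# Hypothetical definitions: what the code says should exist...
desired = {
    "web_server": {"size": "small", "public": False},
    "database": {"size": "large", "public": False},
}
# ...and what is actually running right now.
actual = {
    "web_server": {"size": "small", "public": True},   # drifted from the definition
    "old_cache": {"size": "small", "public": False},   # no longer defined in code
}

for action, name in plan_changes(desired, actual):
    print(f"{action}: {name}")
```

The user only edits `desired`; the comparison decides what to create, update, or delete. That same property is what makes drift (such as the `public` flag above) visible and correctable.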

Assuming an organization decides to implement Terraform, what's the next step? Initially, there needs to be some ramp time for infrastructure or cloud engineers to gain some proficiency with the tooling. The scope of this article necessarily leaves out a lot of the contextual information around how to actually use a tool like Terraform and deploy infrastructure with it. Once the team is comfortable, it's time to scale, and this is where the real evolutionary leap happens for how an organization manages their application infrastructure.

However, defining everything in code is something of a double-edged sword, since traditional information security tools largely no longer apply. If everything is code, everything must be secured like code. This means automated linting and static analysis, policy enforcement with tools like OPA and Sentinel, and, where possible, more extensive unit and integration tests. It's not enough to actively scan for vulnerabilities and misconfiguration in live workloads; these types of issues need to be caught and remediated before they make it to production environments. Managing this workflow at scale will require CI/CD infrastructure to transform once-disparate parts of software development into a cohesive and automated workflow.
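As a rough illustration of catching misconfiguration before production, the sketch below inspects a Terraform plan in JSON form (the kind of document `terraform show -json` produces for a saved plan) and flags AWS security groups that allow ingress from anywhere. It is a stand-in for what a policy tool like OPA or Sentinel would do, not a replacement for one. The `sample_plan` data is hand-written for this example and includes only the fields the check reads.

```python
from typing import Dict, List


def find_open_ingress(plan: Dict) -> List[str]:
    """Return a finding for each security group rule open to the whole internet."""
    findings = []
    for rc in plan.get("resource_changes", []):
        if rc.get("type") != "aws_security_group":
            continue
        # "after" holds the planned attributes; it is null for deletions.
        after = (rc.get("change") or {}).get("after") or {}
        for rule in after.get("ingress") or []:
            if "0.0.0.0/0" in (rule.get("cidr_blocks") or []):
                ports = f"{rule.get('from_port')}-{rule.get('to_port')}"
                findings.append(f"{rc['address']}: ports {ports} open to 0.0.0.0/0")
    return findings


# Hand-written sample that mimics the shape of a plan; not real output.
sample_plan = {
    "resource_changes": [
        {
            "address": "aws_security_group.web",
            "type": "aws_security_group",
            "change": {
                "after": {
                    "ingress": [
                        {"from_port": 22, "to_port": 22, "cidr_blocks": ["0.0.0.0/0"]},
                        {"from_port": 443, "to_port": 443, "cidr_blocks": ["10.0.0.0/8"]},
                    ]
                }
            },
        }
    ]
}

findings = find_open_ingress(sample_plan)
for finding in findings:
    print(finding)

# In a CI job, a non-zero exit code would block the change from being applied.
exit_code = 1 if findings else 0
```

Run as a pipeline step between planning and applying, a check like this fails the build on the SSH rule while letting the internal HTTPS rule through.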

Shifting from a paradigm of manual security configuration of long-lived servers to end-to-end security of the entire software development lifecycle can be difficult for traditional ops and admin teams. The pattern of fast feedback and continuous improvement is the backbone of the DevOps model, but it may require organizations to undergo significant changes to their staff, technical acumen, and development culture.

Securing a Production Environment that's Always Changing

Classic on-premise network environments saw little net change from day to day or week to week. Hardware servers were costly and had long integration cycles before they could be utilized for production workloads, which gave operations engineers and system administrators ample time to secure and configure each server for a given workload. In a cloud environment, new nodes may be created and destroyed by the hundreds and thousands multiple times per day. Additionally, technologies like containerization add a new wrinkle of security complexity to the runtime environment, presenting challenges that aren't present with traditional servers or virtual machines.

Attempting to properly configure, secure, and monitor these workloads, as well as the environments they run in, is an impossible challenge without the correct supporting infrastructure and processes.

Service Discovery

Service discovery enables services running in a distributed environment to discover and communicate with each other automatically. In the context of potentially hundreds or thousands of compute nodes, manual intervention and configuration to enable this communication would be overly cumbersome and inefficient. Tools like Consul, ZooKeeper, and Eureka are popular choices. Automatic service discovery means new resources can automatically be registered with monitoring systems and assigned relevant configuration and security policy.
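The sketch below shows the core mechanics in a toy, in-memory form: instances register themselves, send heartbeats, and drop out of lookups when they go quiet, and each new instance is assigned a default security policy at registration time. It does not use the Consul, ZooKeeper, or Eureka APIs; the class, the policy names, and the addresses are all hypothetical.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Instance:
    service: str
    address: str
    last_heartbeat: float
    policies: List[str] = field(default_factory=list)


class Registry:
    """Toy service registry with TTL-based health and default policies."""

    def __init__(self, ttl_seconds: float, default_policies: Dict[str, List[str]]):
        self.ttl = ttl_seconds
        self.default_policies = default_policies
        self.instances: Dict[str, Instance] = {}

    def register(self, service: str, address: str, now: Optional[float] = None) -> Instance:
        now = time.monotonic() if now is None else now
        # Unknown services fall back to the most restrictive policy.
        policies = list(self.default_policies.get(service, ["deny-all"]))
        instance = Instance(service, address, now, policies)
        self.instances[address] = instance
        return instance

    def heartbeat(self, address: str, now: Optional[float] = None) -> None:
        now = time.monotonic() if now is None else now
        self.instances[address].last_heartbeat = now

    def lookup(self, service: str, now: Optional[float] = None) -> List[str]:
        """Return addresses of instances whose heartbeat is within the TTL."""
        now = time.monotonic() if now is None else now
        return sorted(
            inst.address
            for inst in self.instances.values()
            if inst.service == service and now - inst.last_heartbeat <= self.ttl
        )


# Explicit timestamps keep the example deterministic.
registry = Registry(ttl_seconds=30, default_policies={"api": ["require-mtls", "log-requests"]})
registry.register("api", "10.0.0.5:8080", now=0)
registry.register("api", "10.0.0.6:8080", now=0)
registry.heartbeat("10.0.0.5:8080", now=25)

live = registry.lookup("api", now=40)
print(live)
```

Only the instance that kept sending heartbeats is returned at `now=40`. Falling back to `deny-all` for unknown services is a design choice in keeping with zero trust: a node nobody has described gets no access until someone defines a policy for it.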

Monitoring

Much more detailed and holistic monitoring and observability tooling is needed to understand application and infrastructure behavior in complex distributed systems like the cloud, and to quickly identify and isolate anomalies that may indicate compromise. Datadog, Honeycomb, and New Relic are some of the platforms that have seen broad industry adoption.
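As a simplified picture of what "identifying anomalies" can mean, the sketch below flags a metric sample that sits far from its recent baseline using a z-score. Commercial platforms use considerably more sophisticated methods; the threshold of 3 standard deviations and the sample request counts are assumptions chosen for illustration.

```python
from statistics import mean, stdev
from typing import List


def is_anomalous(history: List[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it is more than `threshold` standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough data to establish a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > threshold


# Hypothetical requests-per-minute samples for one service.
baseline = [120, 118, 125, 122, 119, 121, 123, 120]

print(is_anomalous(baseline, 124))  # within normal variation
print(is_anomalous(baseline, 900))  # sudden spike worth investigating
```

A spike like the second sample could be a traffic surge, a misbehaving client, or data exfiltration; the point of the check is to surface it quickly so someone can tell which.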

Containers

In the modern cloud, containers have become ubiquitous. Docker has seen broad adoption as the container engine of choice, and Kubernetes has quickly become the standard for container orchestration. However, as mentioned previously, they present new and different security challenges compared to traditional workloads, and the entire development and deployment lifecycle involving container-based workloads should be monitored carefully. Tools like Trivy can be used to scan containers and base images for vulnerabilities earlier in the software development lifecycle (SDLC). Integration test environments should be configured to carefully monitor new container deployments for anomalous behavior, and production workloads require careful configuration to avoid glaring security vulnerabilities.
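To give a sense of what "careful configuration" looks like in practice, the sketch below audits a Kubernetes pod definition (represented as a Python dictionary) for three common problems: privileged containers, containers not required to run as non-root, and images pinned to no tag or to `latest`. It complements, rather than replaces, an image scanner such as Trivy, which looks for known vulnerabilities inside the image itself. The pod, container, and image names are hypothetical.

```python
from typing import Dict, List


def audit_pod(pod: Dict) -> List[str]:
    """Return human-readable findings for risky container settings."""
    findings = []
    spec = pod.get("spec", {})
    pod_sc = spec.get("securityContext") or {}
    for container in spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        sc = container.get("securityContext") or {}

        if sc.get("privileged"):
            findings.append(f"{name}: runs privileged")

        # A container-level setting takes precedence over the pod-level one.
        if not sc.get("runAsNonRoot", pod_sc.get("runAsNonRoot")):
            findings.append(f"{name}: runAsNonRoot is not set")

        # Look only at the last path segment so a registry port is not
        # mistaken for a tag; images pinned by digest are accepted.
        last_segment = container.get("image", "").rsplit("/", 1)[-1]
        if "@" not in last_segment:
            if ":" not in last_segment or last_segment.endswith(":latest"):
                findings.append(f"{name}: image tag is missing or 'latest'")
    return findings


sample_pod = {
    "metadata": {"name": "checkout"},
    "spec": {
        "containers": [
            {
                "name": "app",
                "image": "example/app:latest",
                "securityContext": {"privileged": True},
            },
            {
                "name": "sidecar",
                "image": "example/proxy:1.4.2",
                "securityContext": {"runAsNonRoot": True},
            },
        ]
    },
}

pod_findings = audit_pod(sample_pod)
for finding in pod_findings:
    print(finding)
```

All three findings come from the `app` container; the `sidecar` passes. A check like this fits naturally in the same CI stage as image scanning, so both the image contents and the way it is run are reviewed before deployment.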

Cloud Infrastructure Security is About Addressing New Challenges

Cloud environments present challenges and scenarios that are unlike what exists in an on-premise setting. New skill sets and tooling are needed to shift to a very code- and development-heavy focus. Hiring or outsourcing is definitely on the table for most organizations that have traditionally utilized non-cloud compute. Effective security becomes much more about proactive protection in a zero-trust environment than about reactively monitoring legacy infrastructure. The cloud can unlock numerous benefits of scale and speed, but software organizations should ensure they're ready to utilize it safely and effectively.
